In an effort to enrich touch interaction, our newest paper explores whether different fingers can be distinguished based on the capacitive data of the front screen.

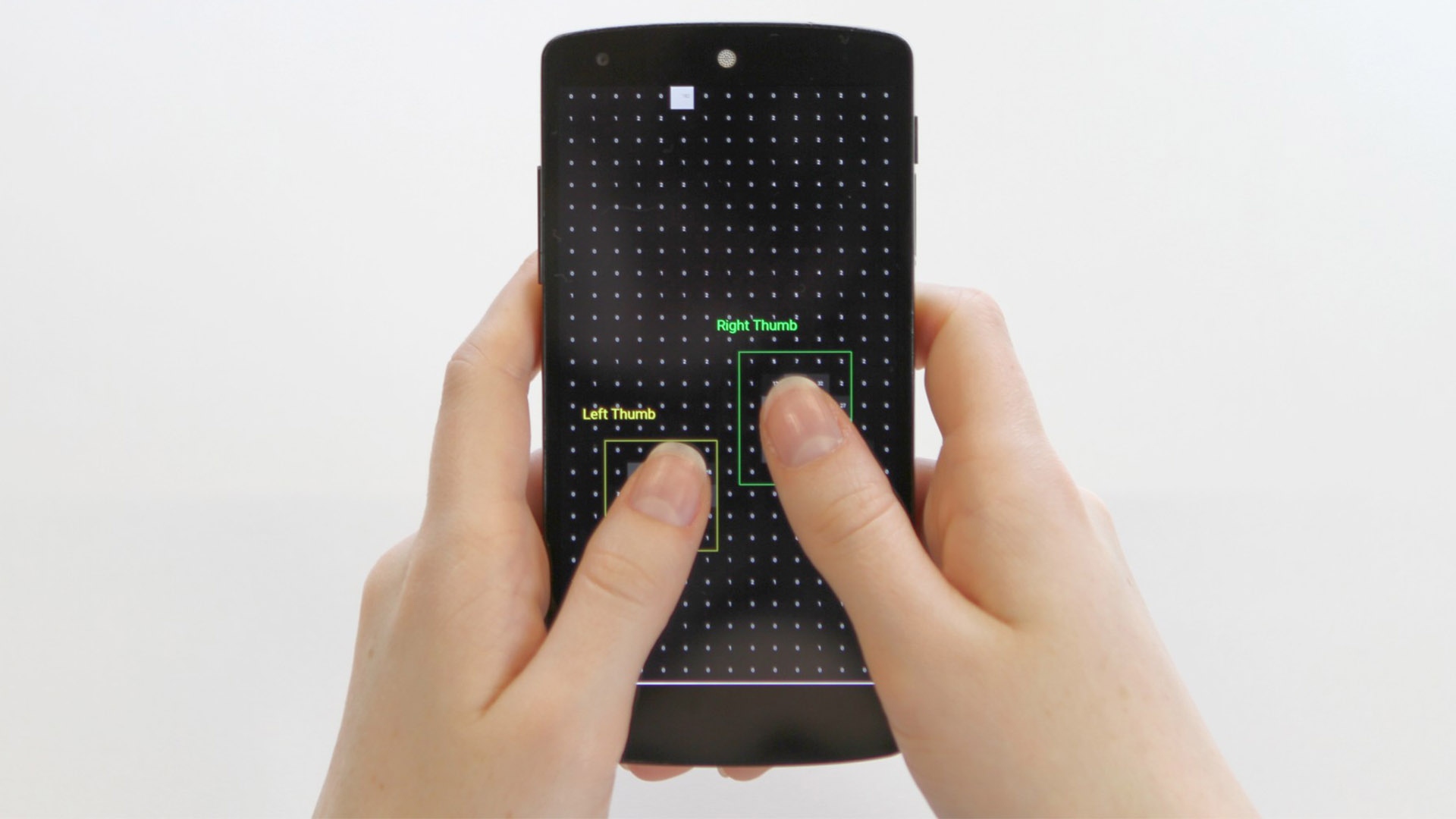

Touchscreens enable intuitive mobile interaction. However, touch input is limited to 2D touch locations, which makes it challenging to provide shortcuts and secondary actions similar to hardware keyboards and mice. Previous work presented a wide range of approaches to provide secondary actions by identifying which finger touched the display. While these approaches are based on external sensors, which are inconvenient, we use capacitive images from mobile touchscreens to investigate the feasibility of finger identification. We collected a dataset of low-resolution fingerprints and trained convolutional neural networks that classify touches from eight combinations of fingers. We focused on combinations that involve the thumb and index finger as these are mainly used for interaction. As a result, we achieved an accuracy of over 92 % for a position-invariant differentiation between left and right thumbs. We evaluated the model and two use cases that users find useful and intuitive. We publicly share our dataset (CapFingerId) comprising 455,709 capacitive images of touches from each finger on a representative mutual capacitive touchscreen and our models to enable future work using and improving them.
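To give a rough intuition for how a convolutional model can treat a capacitive image in a position-invariant way, here is a minimal, dependency-free sketch. It is not the architecture from the paper: the grid size (`ROWS`, `COLS`), the hand-written `KERNEL`, the toy `BLOB` values, and all function names are assumptions made up for illustration. A real model would learn many kernels from the dataset instead of using a single fixed one.

```python
# Illustrative sketch only, NOT the model from the paper.
# Assumptions: grid size, kernel, and blob values are invented for this example.

ROWS, COLS = 27, 15  # assumed size of the capacitive image (hypothetical)

# One fixed 3x3 kernel that responds to blobs tilted along the anti-diagonal.
# A trained CNN would learn many such kernels from data.
KERNEL = [
    [0.0, 0.0, 1.0],
    [0.0, 1.0, 0.0],
    [1.0, 0.0, 0.0],
]

# Toy touch blob, tilted like "/" (made-up capacitance values).
BLOB = [
    [0.0, 0.0, 2.0, 3.0],
    [0.0, 3.0, 4.0, 1.0],
    [2.0, 3.0, 1.0, 0.0],
]


def mirror(blob):
    """Flip a blob horizontally, a crude stand-in for the opposite hand."""
    return [list(reversed(row)) for row in blob]


def make_image(blob, top, left):
    """Return an all-zero ROWS x COLS image with the blob copied in."""
    image = [[0.0] * COLS for _ in range(ROWS)]
    for r, row in enumerate(blob):
        for c, value in enumerate(row):
            image[top + r][left + c] = value
    return image


def conv2d_valid(image, kernel):
    """'Valid' 2D cross-correlation, the core operation of a CNN layer."""
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(len(image) - kh + 1):
        out_row = []
        for c in range(len(image[0]) - kw + 1):
            acc = 0.0
            for i in range(kh):
                for j in range(kw):
                    acc += image[r + i][c + j] * kernel[i][j]
            out_row.append(acc)
        out.append(out_row)
    return out


def feature(image, kernel=KERNEL):
    """Convolution, ReLU, then global max pooling down to a single number."""
    feature_map = conv2d_valid(image, kernel)
    return max(max(0.0, v) for row in feature_map for v in row)


if __name__ == "__main__":
    print(feature(make_image(BLOB, 5, 3)))           # blob near the top
    print(feature(make_image(BLOB, 18, 8)))          # same blob, moved
    print(feature(make_image(mirror(BLOB), 5, 3)))   # mirrored blob
```

As long as the blob stays a couple of cells away from the border, moving it does not change the pooled feature, while mirroring it does. In this toy setup, that is the property a classifier needs to tell a left-tilted touch from a right-tilted one regardless of where on the screen it lands.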
