2026

Chiossi, Francesco; Imamaliyev, Elnur; Bleichner, Martin G.; Mayer, Sven

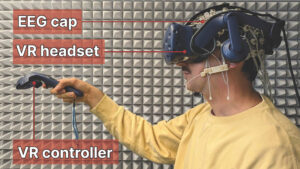

Anticipation Before Action: EEG-Based Implicit Intent Detection for Adaptive Gaze Interaction in Mixed Reality Proceedings Article Forthcoming

In: Proceedings of the 2026 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, Barcelona, Spain, Forthcoming, ISBN: 979-8-4007-2278-3/2026/04.

@inproceedings{chiossi2026anticipation,

title = {Anticipation Before Action: EEG-Based Implicit Intent Detection for Adaptive Gaze Interaction in Mixed Reality},

author = {Francesco Chiossi and Elnur Imamaliyev and Martin G. Bleichner and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2026/01/chiossi2026anticipation.pdf

http://osf.io/9x6jy/},

doi = {10.1145/3772318.3790523},

isbn = {979-8-4007-2278-3/2026/04},

year = {2026},

date = {2026-04-13},

urldate = {2026-04-13},

booktitle = {Proceedings of the 2026 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {Barcelona, Spain},

series = {CHI '26},

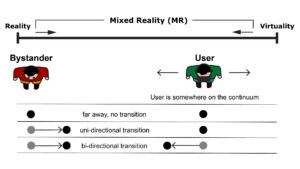

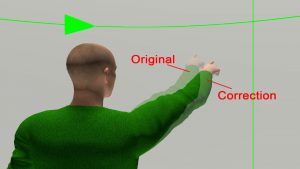

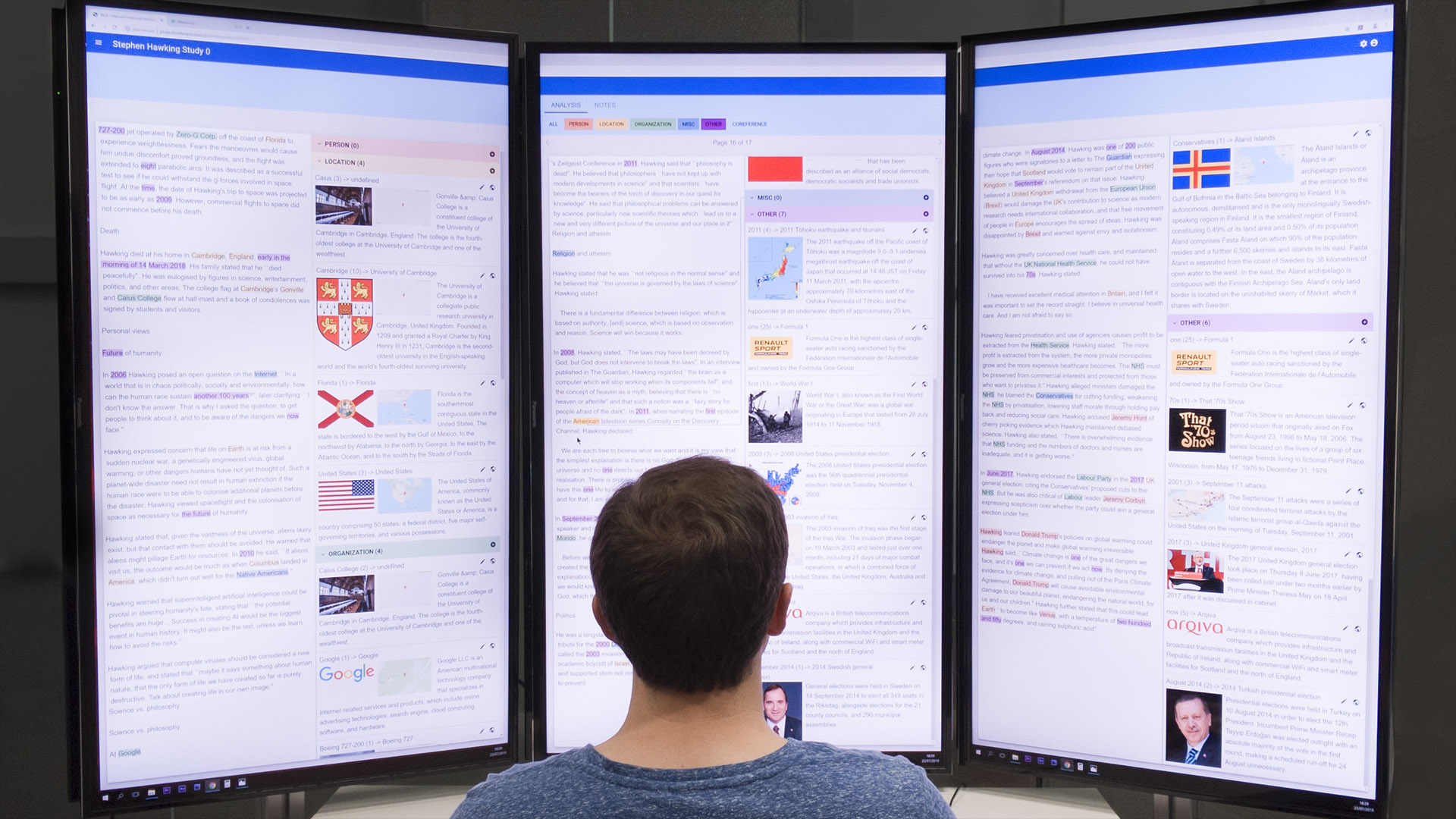

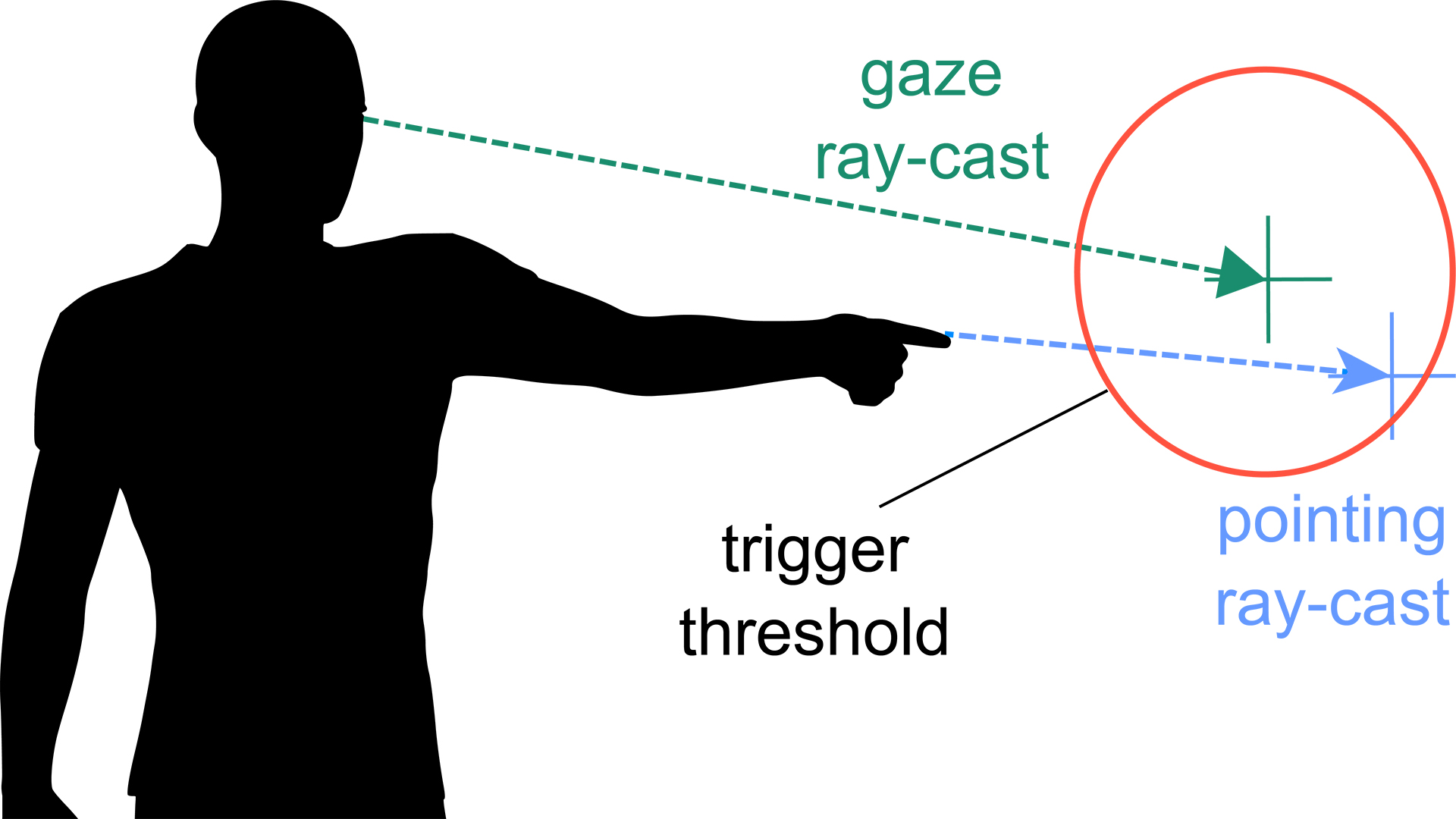

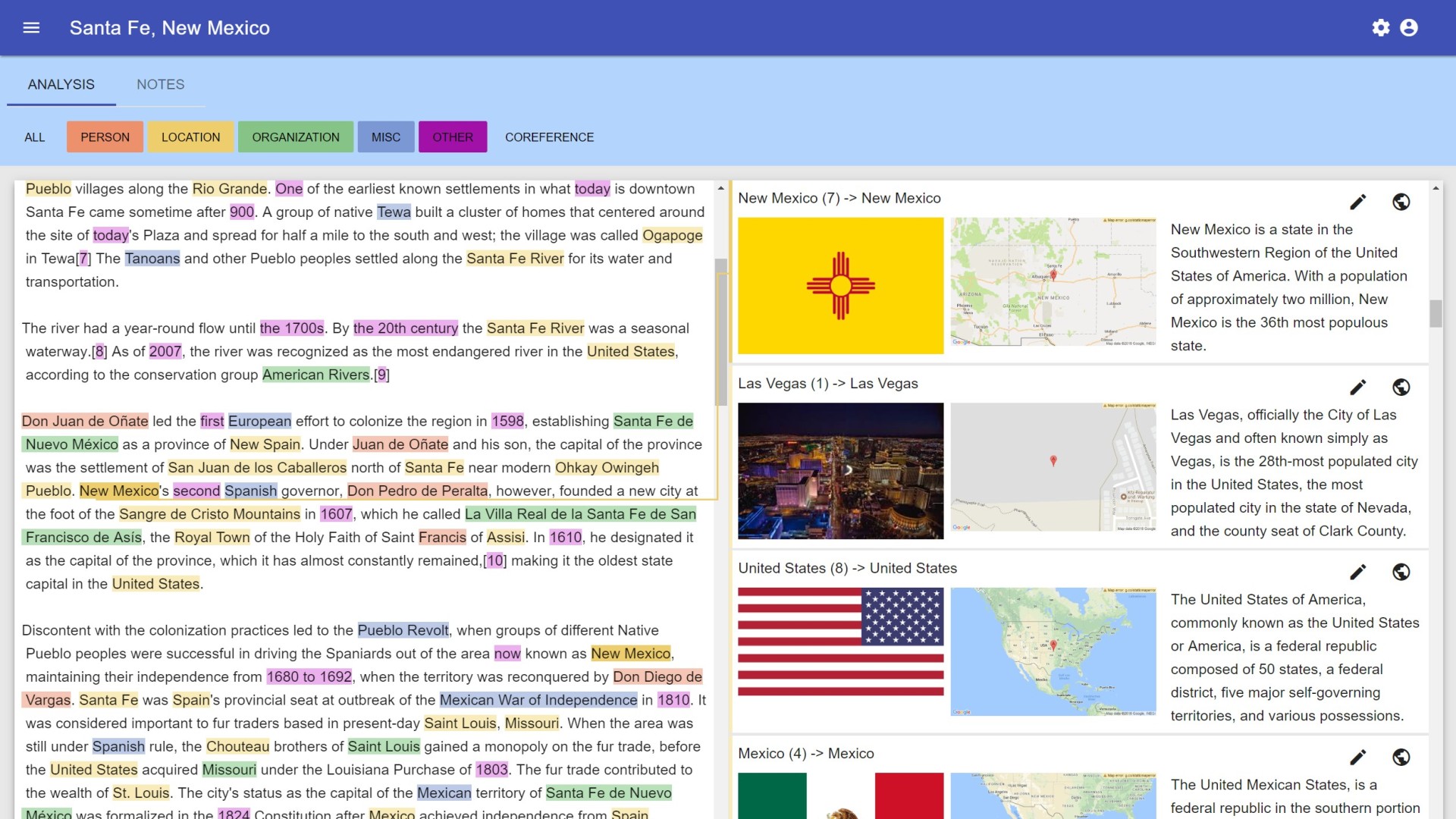

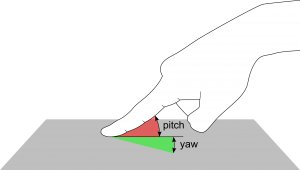

abstract = {Mixed Reality (MR) interfaces increasingly rely on gaze for interaction, yet distinguishing visual attention from intentional action remains difficult, leading to the Midas Touch problem. Existing solutions require explicit confirmations, while brain\textendashcomputer interfaces may provide an implicit marker of intention using Stimulus-Preceding Negativity (SPN). We investigated how Intention (Select vs. Observe) and Feedback (With vs. Without) modulate SPN during gaze-based MR interactions. During realistic selection tasks, we acquired EEG and eye-tracking data from 28 participants. SPN was robustly elicited and sensitive to both factors: observation without feedback produced the strongest amplitudes, while intention to select and expectation of feedback reduced activity, suggesting SPN reflects anticipatory uncertainty rather than motor preparation. Complementary decoding with deep learning models achieved reliable person-dependent classification of user intention, with accuracies ranging from 75% to 97% across participants. These findings identify SPN as an implicit marker for building intention-aware MR interfaces that mitigate the Midas Touch.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

Park, Hyerim; Huynh, Khanh; Eiband, Malin; Dillmann, Jeremy; Mayer, Sven; Sedlmair, Michael

Evaluating Generative AI in the Lab: Methodological Challenges and Guidelines Proceedings Article Forthcoming

In: Proceedings of the 31st International Conference on Intelligent User Interfaces, Association for Computing Machinery, Paphos, Cyprus, Forthcoming, ISBN: 979-8-4007-1984-4.

@inproceedings{park2026evaluating,

title = {Evaluating Generative AI in the Lab: Methodological Challenges and Guidelines},

author = {Hyerim Park and Khanh Huynh and Malin Eiband and Jeremy Dillmann and Sven Mayer and Michael Sedlmair},

url = {https://sven-mayer.com/wp-content/uploads/2026/01/park2026evaluating.pdf},

doi = {10.1145/3742413.3789065},

isbn = {979-8-4007-1984-4},

year = {2026},

date = {2026-03-24},

urldate = {2026-03-24},

booktitle = {Proceedings of the 31st International Conference on Intelligent User Interfaces},

publisher = {Association for Computing Machinery},

address = {Paphos, Cyprus},

series = {IUI '26},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

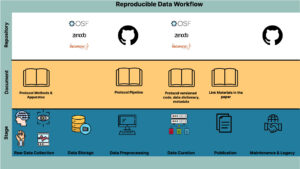

Schnizer, Kathrin; Mitrevska, Teodora; Tag, Benjamin; Ali, Abdallah El; Mayer, Sven

PhysioCHI: Lessons Learned from Implementing Human-Centered Physiological Computing Proceedings Article Forthcoming

In: Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, Forthcoming.

@inproceedings{schnizer2026physiochi,

title = {PhysioCHI: Lessons Learned from Implementing Human-Centered Physiological Computing},

author = {Kathrin Schnizer and Teodora Mitrevska and Benjamin Tag and Abdallah El Ali and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2026/01/schnizer2026physiochi.pdf},

doi = {10.1145/3772363.3778794},

year = {2026},

date = {2026-04-13},

urldate = {2026-04-13},

booktitle = {Proceedings of the Extended Abstracts of the CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

series = {CHI EA '26},

abstract = {Integrating physiological signals in Human-Computer Interaction research has significantly advanced our understanding of user experiences and interactions. However, the interdisciplinary nature of this research presents numerous technical challenges. These include the lack of standardized protocols, unclear guidelines for data collection and preprocessing, and difficulties in pipeline management, reproducibility, and transparency. The purpose of this meet-up is to offer a lightweight opportunity for CHI attendees to connect around these issues, exchange experiences, share tools and workflows, and identify best practices. By fostering open exchange, we aim to improve the reliability of physiological data in HCI, promote open science, and build a sustainable community. Ultimately, our goal is to overcome technical barriers and strengthen the foundation for future research in physiological computing.},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

Townsend, Lisa L.; Rasch, Julian; Grech, Amy; Riecke, Bernhard E.; Mayer, Sven

A Tree’s Perspective: Enhancing Nature Connectedness Through Transitional and Multisensory Virtual Reality Experiences Proceedings Article Forthcoming

In: Proceedings of the 2026 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, Barcelona, Spain, Forthcoming, ISBN: 979-8-4007-2278-3.

@inproceedings{townsend2026trees,

title = {A Tree’s Perspective: Enhancing Nature Connectedness Through Transitional and Multisensory Virtual Reality Experiences},

author = {Lisa L. Townsend and Julian Rasch and Amy Grech and Bernhard E. Riecke and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2026/02/townsend2025tree.pdf},

doi = {10.1145/3772318.3790282},

isbn = {979-8-4007-2278-3},

year = {2026},

date = {2026-04-14},

urldate = {2026-04-14},

booktitle = {Proceedings of the 2026 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {Barcelona, Spain},

series = {CHI '26},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

2025

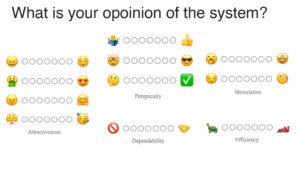

Achatz, Julia; Sailer, Pauline; Mayer, Sven; Schubert, Mark

An explainable segmentation decision tree model for enhanced decision support in roundwood sorting Journal Article

In: Knowledge-Based Systems, vol. 324, pp. 113814, 2025, ISSN: 0950-7051.

@article{achatz2025explainable,

title = {An explainable segmentation decision tree model for enhanced decision support in roundwood sorting},

author = {Julia Achatz and Pauline Sailer and Sven Mayer and Mark Schubert},

doi = {10.1016/j.knosys.2025.113814},

issn = {0950-7051},

year = {2025},

date = {2025-01-01},

urldate = {2025-01-01},

journal = {Knowledge-Based Systems},

volume = {324},

pages = {113814},

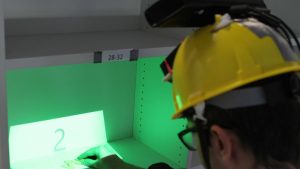

abstract = {Recent industry insights highlight the urgent need for affordable automation and Decision Support Systems (DSSs) in roundwood grading. Existing solutions are often prohibitively expensive or lack the necessary interpretability for practical application. To address these industrial needs, we propose an affordable grading system that emphasizes transparency, fosters user trust, facilitates employee training, and enables effective feature detection in line with trade standards. We introduce the Segmentation Decision Tree (SegDT) classifier\textemdash{}a hybrid-interpretable approach that combines instance segmentation for feature extraction with a decision tree for quality classification. To this end, we use a dataset of 1800 cross-sectional images of roundwood annotated with six different features (including the pith). To provide context, we utilize Gradient-weighted Class Activation Mapping (GradCam) to enhance the explainability of existing Convolutional Neural Networks (CNNs) in roundwood sorting, tackling their black-box nature. We compare the CNN + GradCam model with the SegDT classifier, evaluating both accuracy and explainability. In a quantitative user study, 24 participants evaluated both systems regarding user experience and trust, while also comparing them to a standard dimension-based grading system. The SegDT classifier achieved 80% accuracy in distinguishing three main quality grades, matching the performance of CNN classification models. However, the study revealed a clear user preference for SegDT over the CNN + GradCam prototype. While both approaches improve grading efficiency and accuracy, SegDT stands out for its transparency and usability, making it a strong candidate for industry adoption. For a video summary of this paper, visit https://youtu.be/A1w1rYWOWxo},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Barricelli, Barbara Rita; Campos, José Creissac; Luyten, Kris; Mayer, Sven; Palanque, Philippe; Panizzi, Emanuele; Spano, Lucio Davide; Stumpf, Simone

Engineering Interactive Systems Embedding AI Technologies (3rd workshop on) Proceedings Article

In: Companion Proceedings of the 17th ACM SIGCHI Symposium on Engineering Interactive Computing Systems, pp. 71–75, Association for Computing Machinery, Trier, Germany, 2025, ISBN: 9798400718663.

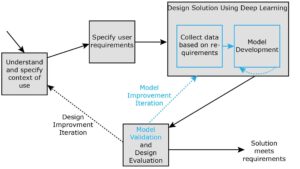

@inproceedings{barricelli2025engineering,

title = {Engineering Interactive Systems Embedding AI Technologies (3rd workshop on)},

author = {Barbara Rita Barricelli and Jos\'{e} Creissac Campos and Kris Luyten and Sven Mayer and Philippe Palanque and Emanuele Panizzi and Lucio Davide Spano and Simone Stumpf},

doi = {10.1145/3731406.3735965},

isbn = {9798400718663},

year = {2025},

date = {2025-01-01},

booktitle = {Companion Proceedings of the 17th ACM SIGCHI Symposium on Engineering Interactive Computing Systems},

pages = {71\textendash75},

publisher = {Association for Computing Machinery},

address = {Trier, Germany},

series = {EICS '25 Companion},

abstract = {This workshop proposal is the third edition of a workshop which has been organised at EICS 2023 and EICS 2024. This edition aims to bring together researchers and practitioners interested in the engineering of interactive systems that embed AI technologies (as for instance, AI-based recommender systems) or that use AI during the engineering lifecycle. The overall objective is to identify (from experience reported by participants) methods, techniques, and tools to support the use and inclusion of AI technologies throughout the engineering lifecycle for interactive systems. A specific focus is on guaranteeing that user-relevant properties such as usability and user experience are accounted for. Contributions are also expected to address system-related properties, including resilience, dependability, reliability, or performance. Another focus is on the identification and definition of software architectures supporting this integration.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

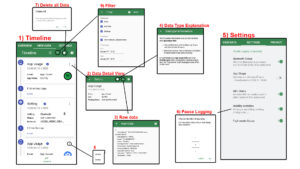

Bemmann, Florian; Koch, Timo K.; Bergmann, Maximilian; Stachl, Clemens; Buschek, Daniel; Schoedel, Ramona; Mayer, Sven

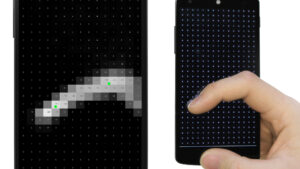

Contextualizing Smartphone-Typed Language With User Input Intention Proceedings Article

In: Proceedings of the Mensch Und Computer 2025, pp. 520–526, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 9798400715822.

@inproceedings{bemmann2025contextualizing,

title = {Contextualizing Smartphone-Typed Language With User Input Intention},

author = {Florian Bemmann and Timo K. Koch and Maximilian Bergmann and Clemens Stachl and Daniel Buschek and Ramona Schoedel and Sven Mayer},

url = {https://doi.org/10.1145/3743049.3748537},

doi = {10.1145/3743049.3748537},

isbn = {9798400715822},

year = {2025},

date = {2025-01-01},

booktitle = {Proceedings of the Mensch Und Computer 2025},

pages = {520\textendash526},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {MuC '25},

abstract = {While the study of smartphone-typed language offers valuable insights for HCI and interdisciplinary research, existing data collection methods often fall short in providing context. Contextualizing text contents solely by the input target app does not allow for selecting them by the actual input intention of the user (e.g., sending a direct message, publishing a post). We present a method to study smartphone-typed language in the wild, which extracts the user’s input intention from a text field’s prompt text. Our approach enables distinguishing language contents by their channel (i.e., comments, messaging, search inputs), which allows filtering and pre-selecting text contents by the user’s input intention and type of language. We provide software libraries and the underlying categorization schema of our method. Researchers can apply this on-device in studies relying on smartphone-typed language. We outline which further opportunities for adaptation our general procedure motivates, to facilitate interdisciplinary studies in the wild.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Bemmann, Florian; Windl, Maximiliane; Knobloch, Tobias; Mayer, Sven

European Users’ In-Depth Privacy Concerns with Smartphone Data Collection Honorable Mention Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 9, no. 5, 2025.

@article{bemmann2025european,

title = {European Users’ In-Depth Privacy Concerns with Smartphone Data Collection},

author = {Florian Bemmann and Maximiliane Windl and Tobias Knobloch and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/07/bemmann2025european.pdf

https://osf.io/d3fe8/},

doi = {10.1145/3743719},

year = {2025},

date = {2025-09-01},

urldate = {2025-09-01},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {9},

number = {5},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {Today's context-aware mobile phones allow developers to build intelligent and adaptive applications. The data demand induced by context awareness leads to decreased trust and increased privacy concerns. However, users' deeper reasons and real-world fears that underlie these concerns are not fully understood. We conducted an online survey (N=100) and semi-structured interviews (N=20) to understand users' concerns about smartphone data privacy. We investigated three key areas: general user understanding and misconceptions, specific in-depth concerns, and mitigation strategies. We found that effective transparency and control are the central themes across all areas. Users are concerned about privacy issues negatively impacting their lives, especially through financial loss, physical harm, or manipulation. We show that privacy measures should be implemented with a stronger focus on the user by keeping the user in the loop through transparency and control.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Bemmann, Florian; Schmidmaier, Matthias; Paneva, Viktorija; Murtezaj, Doruntina; Wiethoff, Alexander; Mayer, Sven

Workshop on Societal Effects of AI in Mobile Social Media Proceedings Article

In: Adjunct Proceedings of the 27th International Conference on Mobile Human-Computer Interaction, Association for Computing Machinery, Sharm El-Sheikh, Egypt, 2025, ISBN: 979-8-4007-1970-7/2025/09.

@inproceedings{bemmann2025workshop,

title = {Workshop on Societal Effects of AI in Mobile Social Media},

author = {Florian Bemmann and Matthias Schmidmaier and Viktorija Paneva and Doruntina Murtezaj and Alexander Wiethoff and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/08/bemmann2025workshop.pdf},

doi = {10.1145/3737821.3743750},

isbn = {979-8-4007-1970-7/2025/09},

year = {2025},

date = {2025-09-22},

urldate = {2025-09-22},

booktitle = {Adjunct Proceedings of the 27th International Conference on Mobile Human-Computer Interaction},

publisher = {Association for Computing Machinery},

address = {Sharm El-Sheikh, Egypt},

series = {MobileHCI '25 Adjunct},

abstract = {Social media platforms constitute an essential part of many people's mobile device usage. Their contents have adapted to the mobile form factor, e.g., through an increase of short-form video and content recommendation instead of navigation and active selection. Social media systems thereby have a strong influence on individuals and society, for example, concerning public discourse and opinion-making. The rise of AI-generated content and LLM-backed autonomous agents even pushes such developments. This workshop discusses social media's recent developments and yielding positive and negative effects on our society. Participants will share their perspectives of HCI research on social media systems and the research aims they are pursuing. In this workshop, we outline opportunities in joining insights from social sciences with the potential of recent developments in Human-Computer Interaction approaches. Interface design ideas will be explored and discussed with the research community, synthesizing collective challenges, promising future directions, and strategies for research that mitigate the negative effects of AI in social media systems on our society.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

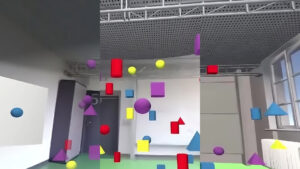

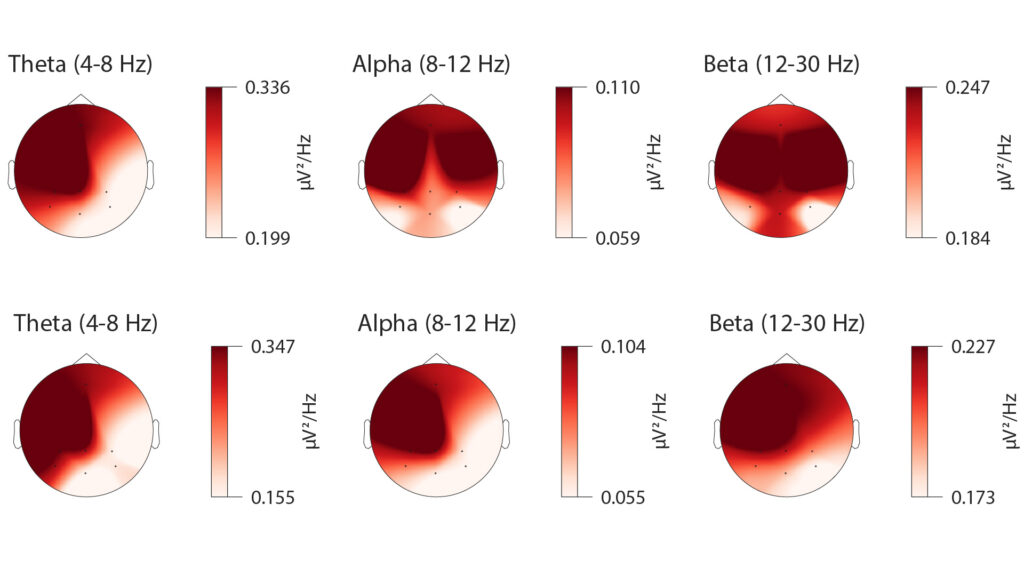

Chiossi, Francesco; Ou, Changkun; Gerhardt, Carolina; Putze, Felix; Mayer, Sven

Designing and Evaluating an Adaptive Virtual Reality System using EEG Frequencies to Balance Internal and External Attention States Journal Article

In: International Journal of Human-Computer Studies, pp. 103433, 2025, ISSN: 1071-5819.

@article{chiossi2024designing,

title = {Designing and Evaluating an Adaptive Virtual Reality System using EEG Frequencies to Balance Internal and External Attention States},

author = {Francesco Chiossi and Changkun Ou and Carolina Gerhardt and Felix Putze and Sven Mayer},

url = {https://www.sciencedirect.com/science/article/pii/S1071581924002167},

doi = {10.1016/j.ijhcs.2024.103433},

issn = {1071-5819},

year = {2025},

date = {2025-02-01},

urldate = {2024-01-01},

journal = {International Journal of Human-Computer Studies},

pages = {103433},

abstract = {Virtual reality (VR) finds various applications in productivity, entertainment, and training, often requiring substantial working memory and attentional resources. Effective task performance in VR relies on prioritizing relevant information and suppressing distractions through internal attention. However, current VR systems fail to account for the impact of working memory loads, leading to over- or under-stimulation. In this work, we designed an adaptive system using EEG correlates of external and internal attention to support working memory tasks. Participants engaged in a visual working memory N-Back task, where we adapted the visual complexity of distracting elements. Our study demonstrated that EEG frontal theta and parietal alpha frequency bands effectively adjust dynamic visual complexity. The adaptive system improved task performance and reduced perceived workload compared to a reverse adaptation. These results highlight the potential of EEG-based adaptive systems to balance distraction management and maintain user engagement without causing cognitive overload.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Foo, Michelle Xiao-Lin; Aslan, Ilhan; Mayer, Sven

Designing and Evaluating In-Situ Assistive Features to Anticipate Text-Based Responses of Conversational Agents Best Paper Proceedings Article Forthcoming

In: Proceedings of the 16th Biannual Conference of the Italian SIGCHI Chapter, Association for Computing Machinery, Salerno, Italy, Forthcoming, ISBN: 979-8-4007-2102-1/25/10.

@inproceedings{foo2025insitu,

title = {Designing and Evaluating In-Situ Assistive Features to Anticipate Text-Based Responses of Conversational Agents},

author = {Michelle Xiao-Lin Foo and Ilhan Aslan and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/10/foo2025designing.pdf},

doi = {10.1145/3750069.3750140},

isbn = {979-8-4007-2102-1/25/10},

year = {2025},

date = {2025-10-06},

urldate = {2025-10-06},

booktitle = {Proceedings of the 16th Biannual Conference of the Italian SIGCHI Chapter},

publisher = {Association for Computing Machinery},

address = {Salerno, Italy},

series = {CHItaly 2025},

keywords = {},

pubstate = {forthcoming},

tppubtype = {inproceedings}

}

Grootjen, Jesse W.; Yadla, Sairam Narsimha Reddy; Mayer, Sven

The Effects of AMD Severities on Eye Movements in Virtual Environments Proceedings Article

In: Mensch und Computer 2025, pp. To appear, Association for Computing Machinery, Chemnitz, Germany, 2025, ISBN: 979-8-4007-1582-2/25/08.

@inproceedings{grootjen2025effects,

title = {The Effects of AMD Severities on Eye Movements in Virtual Environments},

author = {Jesse W. Grootjen and Sairam Narsimha Reddy Yadla and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/08/grootjen2025effects.pdf},

doi = {10.1145/3743049.3748561},

isbn = {979-8-4007-1582-2/25/08},

year = {2025},

date = {2025-08-01},

urldate = {2025-08-01},

booktitle = {Mensch und Computer 2025},

pages = {To appear},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

location = {Chemnitz, Germany},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Grootjen, Jesse W.; Weiss, Yannick; Daniel, Tobias; Kahman, Fabian; Mayer, Sven

Effects of Cataracts Severities on Eye Movements and Task Performance During a Visual Search Task Through Virtual Reality Simulations Proceedings Article

In: Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 979-8-4007-2015-4/25/12.

@inproceedings{grootjen2025cataracts,

title = {Effects of Cataracts Severities on Eye Movements and Task Performance During a Visual Search Task Through Virtual Reality Simulations},

author = {Jesse W. Grootjen and Yannick Weiss and Tobias Daniel and Fabian Kahman and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/11/grootjen2025effects.pdf},

doi = {10.1145/3771882.3771884},

isbn = {979-8-4007-2015-4/25/12},

year = {2025},

date = {2025-12-01},

urldate = {2025-12-01},

booktitle = {Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {MUM '25},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

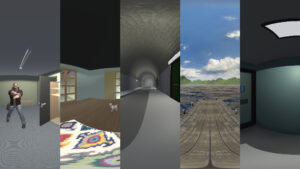

Grootjen, Jesse W.; Yadla, Sairam Narsimha Reddy; Mayer, Sven

Understanding the Impact of Glaucoma Severities Through Virtual Reality Simulations Proceedings Article

In: Proceedings of Mensch und Computer 2025, Association for Computing Machinery, Chemnitz, Germany, 2025.

@inproceedings{grootjen2025understanding,

title = {Understanding the Impact of Glaucoma Severities Through Virtual Reality Simulations},

author = {Jesse W. Grootjen and Sairam Narsimha Reddy Yadla and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/08/grootjen2025understanding.pdf

https://osf.io/b6xpu/},

doi = {10.1145/3743049.3743082},

year = {2025},

date = {2025-08-31},

urldate = {2025-08-31},

booktitle = {Proceedings of Mensch und Computer 2025},

publisher = {Association for Computing Machinery},

address = {Chemnitz, Germany},

series = {MuC '25},

abstract = {Glaucoma is a visual impairment that presents a significant global challenge due to limited access to routine screenings. In this study, we explore glaucoma by creating a virtual reality (VR) simulation of peripheral vision loss. Initial interviews (N=3) indicated that traditional darker peripheral visualizations do not accurately represent the experiences of individuals with glaucoma. To address this, we developed an innovative visualization that simulates varying severities of glaucoma, aligning more closely with the descriptions provided by interviewees. In a subsequent user study (N=24), we examined the impact of different glaucoma severities using our visualization in high-fidelity VR environments, which helped maintain contextual cues. Specifically, we analyzed how glaucoma affects visual search tasks and investigated correlations between eye movement patterns and the severity of the simulated condition. Our results revealed significant differences in both eye movement metrics and task performance. These findings provide a foundation for future simulation studies on glaucoma.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Huynh, Khanh; Dillmann, Jeremy; Mayer, Sven

Spatial Referencing for Large Language Models in Automotive Navigation Tasks Proceedings Article

In: Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 979-8-4007-2015-4/25/12.

@inproceedings{huynh2025spatial,

title = {Spatial Referencing for Large Language Models in Automotive Navigation Tasks},

author = {Khanh Huynh and Jeremy Dillmann and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/11/huynh2025spatial.pdf},

doi = {10.1145/3771882.3771917},

isbn = {979-8-4007-2015-4/25/12},

year = {2025},

date = {2025-12-01},

urldate = {2025-12-01},

booktitle = {Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {MUM '25},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Keppel, Jonas; Strauss, Marvin; Haliburton, Luke; Weingärtner, Henrike; Dominiak, Julia; Faltaous, Sarah; Gruenefeld, Uwe; Mayer, Sven; Woźniak, Paweł W.; Schneegass, Stefan

Situated Artifacts Amplify Engagement in Physical Activity Proceedings Article

In: Proceedings of the 2025 ACM Designing Interactive Systems Conference, pp. 2959–2974, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 9798400714856.

@inproceedings{keppel2025situated,

title = {Situated Artifacts Amplify Engagement in Physical Activity},

author = {Jonas Keppel and Marvin Strauss and Luke Haliburton and Henrike Weing\"{a}rtner and Julia Dominiak and Sarah Faltaous and Uwe Gruenefeld and Sven Mayer and Pawe{\l} W. Wo\'{z}niak and Stefan Schneegass},

url = {https://doi.org/10.1145/3715336.3735690},

doi = {10.1145/3715336.3735690},

isbn = {9798400714856},

year = {2025},

date = {2025-01-01},

booktitle = {Proceedings of the 2025 ACM Designing Interactive Systems Conference},

pages = {2959\textendash2974},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {DIS '25},

abstract = {In the context of rising sedentary lifestyles, this paper investigates the efficacy of “Situated Artifacts” in promoting physical activity. We designed two artifacts that display users’ physical activity data within their homes \textendash one physical and one digital. We conducted a 9-week, counterbalanced, within-subject field study with N = 24 participants to assess the impact of these artifacts on physical activity, reflection, and motivation. We collected quantitative data on physical activity and administered daily and weekly questionnaires, employing individual Likert items and standardized instruments, as well as conducted interviews post-prototype usage. Our findings indicate that while both artifacts act as reminders for physical activity, the physical artifact was superior in terms of user engagement. The study revealed that this can be attributed to the higher perceived presence and, thereby, enhanced social interaction, which acts as a motivational source for activity. In this sense, situated artifacts gently nudge toward sustainable health behavior change.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Kraus, Matthias; Zepf, Sebastian; Westhaeusser, Rebecca; Feustel, Isabel; Zargham, Nima; Aslan, Ilhan; Edwards, Justin; Mayer, Sven; Konto, Dimitrios; Wagner, Nicolas; Andre, Elisabeth

BEHAVE AI: BEst Practices and Guidelines for Human-Centric Design and EvAluation of ProactiVE AI Agents Proceedings Article

In: 30th ACM International Conference on Intelligent User Interfaces Companion (IUI '25 Companion), Association for Computing Machinery, Cagliari, Italy, 2025, ISBN: 979-8-4007-1409-2/25/03.

@inproceedings{kraus2025behave,

title = {BEHAVE AI: BEst Practices and Guidelines for Human-Centric Design and EvAluation of ProactiVE AI Agents},

author = {Matthias Kraus and Sebastian Zepf and Rebecca Westhaeusser and Isabel Feustel and Nima Zargham and Ilhan Aslan and Justin Edwards and Sven Mayer and Dimitrios Konto and Nicolas Wagner and Elisabeth Andre},

doi = {10.1145/3708557.3716155},

isbn = {979-8-4007-1409-2/25/03},

year = {2025},

date = {2025-01-01},

booktitle = {30th ACM International Conference on Intelligent User Interfaces Companion (IUI '25 Companion)},

publisher = {Association for Computing Machinery},

address = {Cagliari, Italy},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Landgrebe, Vivien; Bouquet, Elizabeth; Au, Simon; Mayer, Sven

Augmenting or Immersing? The Impact of Technology Choice on Cognitive Load and Enjoyment in Escape Rooms Proceedings Article

In: Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 979-8-4007-2015-4/25/12.

@inproceedings{landgrebe2025augmenting,

title = {Augmenting or Immersing? The Impact of Technology Choice on Cognitive Load and Enjoyment in Escape Rooms},

author = {Vivien Landgrebe and Elizabeth Bouquet and Simon Au and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/11/landgrebe2025augmenting.pdf},

doi = {10.1145/3771882.3771887},

isbn = {979-8-4007-2015-4/25/12},

year = {2025},

date = {2025-12-01},

urldate = {2025-12-01},

booktitle = {Proceedings of the 24th International Conference on Mobile and Ubiquitous Multimedia},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {MUM '25},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

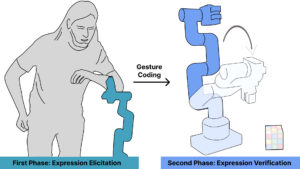

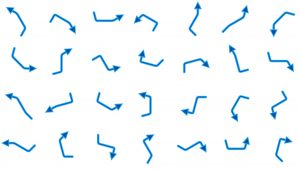

Leusmann, Jan; Villa, Steeven; Liang, Thomas; Wang, Chao; Schmidt, Albrecht; Mayer, Sven

An Approach to Elicit Human-Understandable Robot Expressions to Support Human-Robot Interaction Proceedings Article

In: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, Japan, 2025, ISBN: 979-8-4007-1394-1/25/04.

@inproceedings{leusmann2025approach,

title = {An Approach to Elicit Human-Understandable Robot Expressions to Support Human-Robot Interaction},

author = {Jan Leusmann and Steeven Villa and Thomas Liang and Chao Wang and Albrecht Schmidt and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/03/leusmann2025approach.pdf},

doi = {10.1145/3706598.3713085},

isbn = {979-8-4007-1394-1/25/04},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {Japan},

series = {CHI \'25},

abstract = {Understanding the intentions of robots is essential for natural and seamless human-robot collaboration. Ensuring that robots have means for non-verbal communication is a basis for intuitive and implicit interaction. For this, we describe an approach to elicit and design human-understandable robot expressions. We outline the approach in the context of non-humanoid robots. We paired human mimicking and enactment with research from gesture elicitation in two phases: first, to elicit expressions, and second, to ensure they are understandable. We present an example application through two studies (N=16 \& N=260) of our approach to elicit expressions for a simple 6-DoF robotic arm. We show that the approach enabled us to design robot expressions that signal curiosity and interest in getting attention. Our main contribution is an approach to generate and validate understandable expressions for robots, enabling more natural human-robot interaction.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Leusmann, Jan; Villa, Steeven; Berberoglu, Burak; Wang, Chao; Mayer, Sven

Developing and Validating the Perceived System Curiosity Scale (PSC): Measuring Users' Perceived Curiosity of Systems Honorable Mention Proceedings Article

In: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 979-8-4007-1394-1/25/04.

@inproceedings{leusmann2025developing,

title = {Developing and Validating the Perceived System Curiosity Scale (PSC): Measuring Users\' Perceived Curiosity of Systems},

author = {Jan Leusmann and Steeven Villa and Burak Berberoglu and Chao Wang and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/03/leusmann2025developing.pdf},

doi = {10.1145/3706598.3713087},

isbn = {979-8-4007-1394-1/25/04},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI \'25},

abstract = {Like humans, today\'s systems, such as robots and voice assistants, can express curiosity to learn and engage with their surroundings. While curiosity is a well-established human trait that enhances social connections and drives learning, no existing scales assess the perceived curiosity of systems. Thus, we introduce the Perceived System Curiosity (PSC) scale to determine how users perceive curious systems. We followed a standardized process of developing and validating scales, resulting in a validated 12-item scale with 3 individual sub-scales measuring explorative, investigative, and social dimensions of system curiosity. In total, we generated 831 items based on literature and recruited 414 participants for item selection and 320 additional participants for scale validation. Our results show that the PSC scale has inter-item reliability and convergent and construct validity. Thus, this scale provides an instrument to explore how perceived curiosity influences interactions with technical systems systematically.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Leusmann, Jan; Prajod, Pooja; Nguyen, Alex Binh Vinh Duc; Pascher, Max; Moere, Andrew Vande; Mayer, Sven

The Future of Human-Robot Synergy in Interactive Environments: The Role of Robots at the Workplace Proceedings Article

In: Adjunct Proceedings of the 4th Annual Symposium on Human-Computer Interaction for Work, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 9798400713972.

@inproceedings{leusmann2025future,

title = {The Future of Human-Robot Synergy in Interactive Environments: The Role of Robots at the Workplace},

author = {Jan Leusmann and Pooja Prajod and Alex Binh Vinh Duc Nguyen and Max Pascher and Andrew Vande Moere and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/07/leusmann2025future.pdf},

doi = {10.1145/3707640.3729210},

isbn = {9798400713972},

year = {2025},

date = {2025-01-01},

urldate = {2025-01-01},

booktitle = {Adjunct Proceedings of the 4th Annual Symposium on Human-Computer Interaction for Work},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHIWORK \'25 Adjunct},

abstract = {The increasing integration of robots into workplaces raises critical questions about human-robot synergy in interactive environments. While robots are designed to enhance productivity and safety, their successful deployment depends on effective collaboration, trust, and seamless interaction with human workers. However, existing research has primarily focused on either technical capabilities or human-centered concerns in isolation, leaving a gap in understanding how robots can be meaningfully integrated into dynamic workspaces. In this workshop, we bring together experts from robotics, HCI, and work sciences to explore the future of human-robot collaboration at the workplace. This workshop aims to identify key design principles, ethical considerations, and practical challenges. The insights gained will inform future research and policy recommendations, shaping a future in which robots act not as mere tools but as cooperative agents that enhance workplace efficiency, well-being, and innovation.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Leusmann, Jan; Belardinelli, Anna; Haliburton, Luke; Hasler, Stephan; Schmidt, Albrecht; Mayer, Sven; Gienger, Michael; Wang, Chao

Investigating LLM-Driven Curiosity in Human-Robot Interaction Proceedings Article

In: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 979-8-4007-1394-1/25/04.

@inproceedings{leusmann2025investigating,

title = {Investigating LLM-Driven Curiosity in Human-Robot Interaction},

author = {Jan Leusmann and Anna Belardinelli and Luke Haliburton and Stephan Hasler and Albrecht Schmidt and Sven Mayer and Michael Gienger and Chao Wang},

url = {https://sven-mayer.com/wp-content/uploads/2025/03/leusmann2025investigating.pdf},

doi = {10.1145/3706598.3713923},

isbn = {979-8-4007-1394-1/25/04},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI \'25},

abstract = {Integrating curious behavior traits into robots is essential for them to learn and adapt to new tasks over their lifetime and to enhance human-robot interaction. However, the effects of robots expressing curiosity on user perception, user interaction, and user experience in collaborative tasks are unclear. In this work, we present a Multimodal Large Language Model-based system that equips a robot with non-verbal and verbal curiosity traits. We conducted a user study (N = 20) to investigate how these traits modulate the robot’s behavior and the users’ impressions of sociability and quality of interaction. Participants prepared cocktails or pizzas with a robot, which was either curious or non-curious. Our results show that we could create user-centric curiosity, which users perceived as more human-like, inquisitive, and autonomous while resulting in a longer interaction time. We contribute a set of design recommendations allowing system designers to take advantage of curiosity in collaborative tasks.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

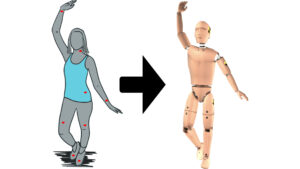

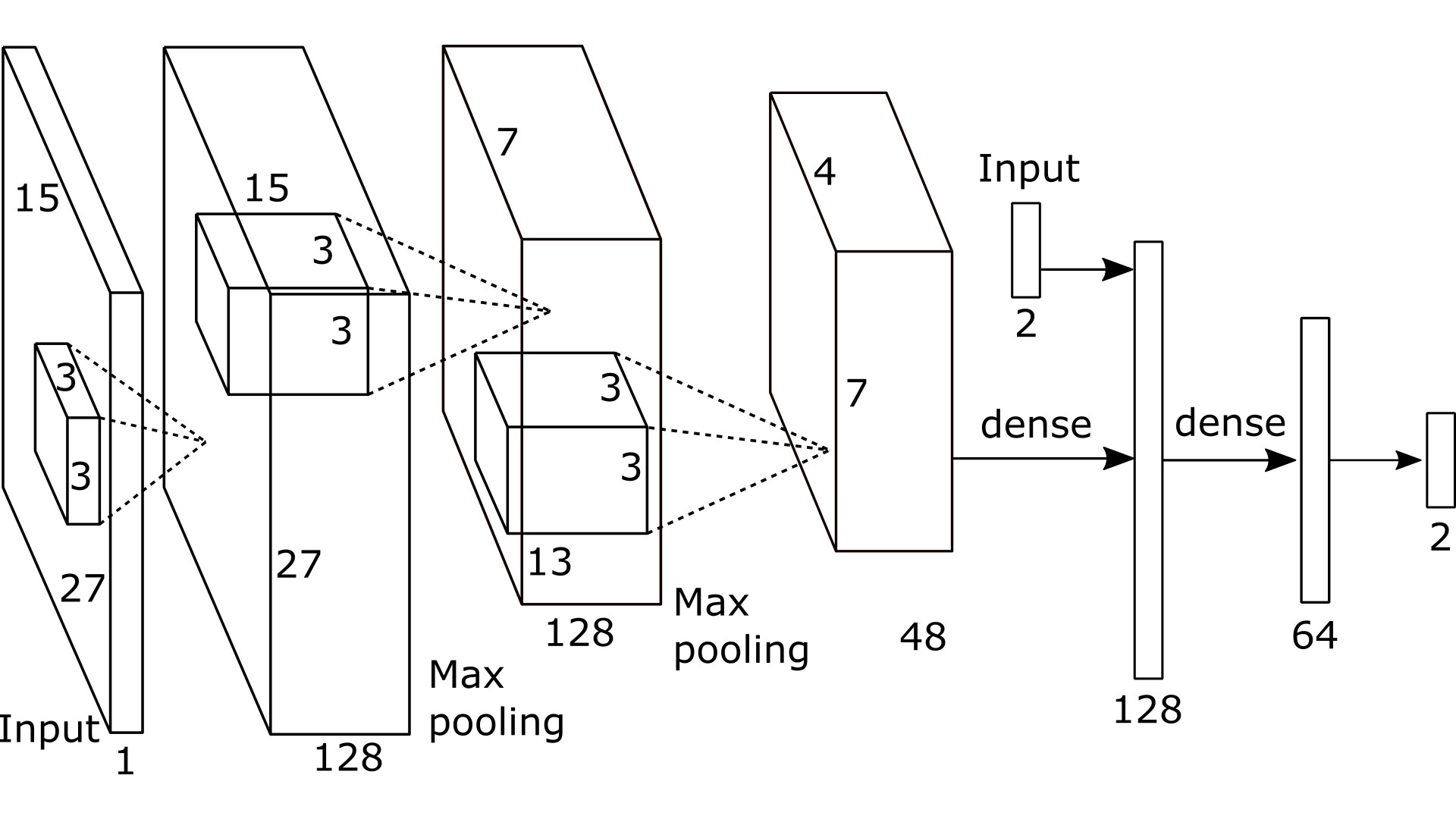

Leusmann, Jan; Felder, Ludwig; Wang, Chao; Mayer, Sven

Understanding Preferred Robot Reaction Times for Human-Robot Handovers Supported by a Deep Learning System Proceedings Article

In: 2025 20th ACM/IEEE International Conference on Human-Robot Interaction, IEEE/ACM, 2025.

@inproceedings{leusmann2025understanding,

title = {Understanding Preferred Robot Reaction Times for Human-Robot Handovers Supported by a Deep Learning System},

author = {Jan Leusmann and Ludwig Felder and Chao Wang and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/01/leusmann2025understanding.pdf

https://osf.io/gn49s/},

year = {2025},

date = {2025-03-06},

urldate = {2025-03-06},

booktitle = {2025 20th ACM/IEEE International Conference on Human-Robot Interaction},

publisher = {IEEE/ACM},

series = {HRI},

abstract = {Human-human handovers are natural and seamless. To be able to do this, humans optimize towards many factors. One of them is the timing when receiving an object. However, the preferred robot reaction time in Human-Robot handovers is currently unclear. To understand the preferred robot reaction time, we trained a Space-Time-Separable Graph Convolutional Network (STS-GCN) model using motion capture data of human-human handovers. We deployed this system on a robotic arm with live depth camera data. We conducted a user study (N=20) with five robot reaction times. We found that users perceived an early prediction as preferred. Furthermore, we found that designers can adapt this timing to their needs based on six sub-components of user perception. We contribute a ready-to-deploy handover classification model, a preferred handover time for our system, and an approach to determine the preferred robot reaction time for robotic systems.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Mitrevska, Teodora; Chiossi, Francesco; Mayer, Sven

ERP Markers of Visual and Semantic Processing in AI-Generated Images: From Perception to Meaning Proceedings Article

In: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, Yokohama, Japan, 2025.

@inproceedings{mitrevska2025erp,

title = {ERP Markers of Visual and Semantic Processing in AI-Generated Images: From Perception to Meaning},

author = { Teodora Mitrevska and Francesco Chiossi and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/03/mitrevska2025erp.pdf

https://osf.io/b89f6/},

doi = {10.1145/3706599.3719907},

year = {2025},

date = {2025-04-28},

urldate = {2025-04-28},

booktitle = {Extended Abstracts of the CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {Yokohama, Japan},

series = {CHI EA \'25},

abstract = {Perceptual similarity assessment plays an important role in processing visual information, which is often employed in Human-AI interaction tasks such as object recognition or content generation. It is important to understand how humans perceive and evaluate visual similarity in order to iteratively generate outputs that increasingly meet users\' expectations. By leveraging physiological signals, systems can rely on users\' EEG responses to support the similarity assessment process. We conducted a study (N=20), presenting diverse AI-generated images as stimuli and evaluating their semantic similarity to a target image while recording event-related potentials (ERPs). Our results show that the P2 and N400 components distinguish medium and high image similarity, while low image similarity did not show a significant impact. Thus, we demonstrate that ERPs allow us to assess the users\' perceived visual similarity to support rapid interactions with human-AI systems.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Mitrevska, Teodora; Tag, Benjamin; Perusquía-Hernández, Monica; Niijima, Arinobu; Sidenmark, Ludwig; Solovey, Erin T.; Ali, Abdallah El; Mayer, Sven; Chiossi, Francesco

SIG PhysioCHI: Human-Centered Physiological Computing in Practice Proceedings Article

In: Extended Abstracts of the CHI Conference on Human Factors in Computing Systems, ACM, Yokohama, Japan, 2025, ISBN: 979-8-4007-1395-8/25/04.

@inproceedings{mitrevska2025sig,

title = {SIG PhysioCHI: Human-Centered Physiological Computing in Practice},

author = {Teodora Mitrevska and Benjamin Tag and Monica Perusqu\'{i}a-Hern\'{a}ndez and Arinobu Niijima and Ludwig Sidenmark and Erin T. Solovey and Abdallah El Ali and Sven Mayer and Francesco Chiossi},

url = {https://sven-mayer.com/wp-content/uploads/2025/03/mitrevska2025sig.pdf},

doi = {10.1145/3706599.3716289},

isbn = {979-8-4007-1395-8/25/04},

year = {2025},

date = {2025-04-01},

urldate = {2025-04-01},

booktitle = {Extended Abstracts of the CHI Conference on Human Factors in Computing Systems},

publisher = {ACM},

address = {Yokohama, Japan},

series = {CHI EA \'25},

abstract = {In recent years, integrating physiological signals in Human-Computer Interaction research has significantly advanced our understanding of user experiences and interactions. However, the interdisciplinary nature of this research presents numerous challenges, including the need for standardized protocols and reporting guidelines. By fostering cross-disciplinary collaboration, we seek to enhance the reproducibility, transparency, and ethical considerations of physiological data in HCI. The purpose of this SIG is to offer a lightweight opportunity for CHI attendees to connect with the community around the center point of physiological computing. This SIG will address key topics such as technical challenges, ethical implications, reproducibility, and open science. We aim to meet as a community and connect with HCI researchers and practitioners to network and exchange bi-directional ideas. Ultimately, our goal is to create a foundation for future research and to establish a community around physiological computing.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Nguyen, Alex Binh Vinh Duc; Leusmann, Jan; Mayer, Sven; Moere, Andrew Vande

Eliciting Understandable Architectonic Gestures for Robotic Furniture through Co-Design Improvisation Proceedings Article

In: 2025 20th ACM/IEEE International Conference on Human-Robot Interaction, IEEE/ACM, 2025.

@inproceedings{nguyen2025eliciting,

title = {Eliciting Understandable Architectonic Gestures for Robotic Furniture through Co-Design Improvisation},

author = {Alex Binh Vinh Duc Nguyen and Jan Leusmann and Sven Mayer and Andrew Vande Moere},

url = {https://sven-mayer.com/wp-content/uploads/2025/01/nguyen2025eliciting.pdf

https://arxiv.org/abs/2501.01813},

year = {2025},

date = {2025-03-06},

urldate = {2025-03-06},

booktitle = {2025 20th ACM/IEEE International Conference on Human-Robot Interaction},

publisher = {IEEE/ACM},

series = {HRI},

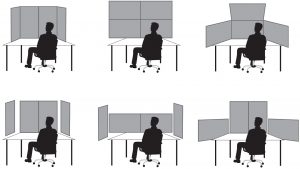

abstract = {The vision of adaptive architecture proposes that robotic technologies could enable interior spaces to physically transform in a bidirectional interaction with occupants. Yet, it is still unknown how this interaction could unfold in an understandable way. Inspired by HRI studies where robotic furniture gestured intents to occupants by deliberately positioning or moving in space, we hypothesise that adaptive architecture could also convey intents through gestures performed by a mobile robotic partition. To explore this design space, we invited 15 multidisciplinary experts to join co-design improvisation sessions, where they manually manoeuvred a deactivated robotic partition to design gestures conveying six architectural intents that varied in purpose and urgency. Using a gesture elicitation method alongside motion-tracking data, a Laban-based questionnaire, and thematic analysis, we identified 20 unique gestural strategies. Through categorisation, we introduced architectonic gestures as a novel strategy for robotic furniture to convey intent by indexically leveraging its spatial impact, complementing the established deictic and emblematic gestures. Our study thus represents an exploratory step toward making the autonomous gestures of adaptive architecture more legible. By understanding how robotic gestures are interpreted based not only on their motion but also on their spatial impact, we contribute to bridging HRI with Human-Building Interaction research.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Oechsner, Carl; Leusmann, Jan; Welsch, Robin; Butz, Andreas; Mayer, Sven

Influence of Perceived Danger on Proxemics in Human-Robot Object Handovers Proceedings Article

In: Proceedings of Mensch und Computer 2025, Association for Computing Machinery, Chemnitz, Germany, 2025.

@inproceedings{oechsner2025influence,

title = {Influence of Perceived Danger on Proxemics in Human-Robot Object Handovers},

author = {Carl Oechsner and Jan Leusmann and Robin Welsch and Andreas Butz and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/08/oechsner2025influence.pdf

https://osf.io/v3ypb},

doi = {10.1145/3743049.3743064},

year = {2025},

date = {2025-08-31},

urldate = {2025-08-31},

booktitle = {Proceedings of Mensch und Computer 2025},

publisher = {Association for Computing Machinery},

address = {Chemnitz, Germany},

series = {MuC\'25},

abstract = {As robots increasingly share human environments, we need to understand how their behavioral parameters affect our perceptions of safety and interaction quality. To explore this, we conducted a user study (N=48) investigating the relationship between approach speed, stopping distance, and the perceived danger of the object itself in a robot-human handover situation. Participants iteratively adjusted the speed and distance of a robot handing them items of varying danger categories to find a combination they considered optimal. We found a significant impact of the delivered item\'s perceived danger index on speed and distance preferences and could identify a linear dependency. By eliciting user preferences for these parameters, we can provide guidelines for adaptable robotic interactions that are considered safe, thus contributing to the design of spaces where robots and humans can coexist seamlessly, emphasizing user experience, trust, and effective collaboration.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Oechsner, Carl; Leusmann, Jan; Zhang, Xuedong; Weber, Thomas; Mayer, Sven

A Vision for Room-scale AI Interaction Proceedings Article

In: Workshop on BEst Practices and Guidelines for Human-Centric Design and EvAluation of ProactiVE AI Agents, CEUR Workshop Proceedings, 2025.

@inproceedings{oechsner2025vision,

title = {A Vision for Room-scale AI Interaction},

author = {Carl Oechsner and Jan Leusmann and Xuedong Zhang and Thomas Weber and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/03/oechsner2025vision.pdf},

year = {2025},

date = {2025-03-24},

urldate = {2025-03-24},

booktitle = {Workshop on BEst Practices and Guidelines for Human-Centric Design and EvAluation of ProactiVE AI Agents},

publisher = {CEUR Workshop Proceedings},

series = {BEHAVE AI\'25},

abstract = {Interactive systems often burden users with micromanagement, requiring them to oversee and control every detail of the interaction. This limits scalability and efficiency in interacting with environments. We address this by presenting a vision for room-scale AI, where interactive environments are enriched with AI functionality to facilitate natural, context-aware interaction. Leveraging multi-agent systems, tasks can be divided into smaller components, enabling AI agent-powered devices to proactively assess situations, support users, and adapt to the environment as needed. This paradigm shift transforms the environment into an active collaborator. We present the potential of room-scale AI through use cases and highlight its broader implications. Finally, we outline key challenges and research directions to advance this transformative concept, aiming to reimagine how users interact with and are supported by intelligent environments.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Schmidmaier, Matthias; Schöberl, Lukas; Rupp, Jonathan; Mayer, Sven

A Systematic and Validated Translation of the Perceived Empathy of Technology Scale from English to German Proceedings Article

In: Proceedings of Mensch und Computer 2025, Association for Computing Machinery, Chemnitz, Germany, 2025.

@inproceedings{schmidmaier2025systematic,

title = {A Systematic and Validated Translation of the Perceived Empathy of Technology Scale from English to German},

author = {Matthias Schmidmaier and Lukas Sch\"{o}berl and Jonathan Rupp and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/08/schmidmaier2025systematic.pdf},

doi = {10.1145/3743049.3743082},

year = {2025},

date = {2025-08-31},

urldate = {2025-08-31},

booktitle = {Proceedings of Mensch und Computer 2025},

publisher = {Association for Computing Machinery},

address = {Chemnitz, Germany},

series = {MuC\'25},

abstract = {Empathic interaction is becoming increasingly important in human-AI interaction, particularly for applications in emotional and mental health support. As these technologies expand globally, culturally and linguistically adapted evaluation tools become essential, as research shows that emotional processing and empathic responses are stronger in one\'s native language. We present a systematic translation and validation of the Perceived Empathy of Technology Scale (PETS) from English to German, following a comprehensive back-translation methodology. Our process included multiple independent translations, expert group discussions, and validation with $N=400$ participants across both languages. Through confirmatory factor analysis and measurement invariance testing, we demonstrate that the German PETS maintains the two-factor structure of the original scale with excellent reliability and achieves configural, metric, and scalar invariance across languages. This validated German PETS enables researchers and developers to accurately assess how German-speaking users perceive the empathic behavior of technological systems, supporting the development of culturally appropriate empathic technologies while further establishing a methodological foundation for future scale translations in HCI.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Schmidmaier, Matthias; Rupp, Jonathan; Harrich, Cedrik; Mayer, Sven

Using Nonverbal Cues in Empathic Multi-Modal LLM-Driven Chatbots for Mental Health Support Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 9, no. 5, 2025.

@article{schmidmaier2025using,

title = {Using Nonverbal Cues in Empathic Multi-Modal LLM-Driven Chatbots for Mental Health Support},

author = {Matthias Schmidmaier and Jonathan Rupp and Cedrik Harrich and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/07/schmidmaier2025using.pdf

https://github.com/kaiaka/mllm-chatbot.git},

doi = {10.1145/3743724},

year = {2025},

date = {2025-09-01},

urldate = {2025-09-01},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {9},

number = {5},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {Despite their popularity in providing digital mental health support, mobile conversational agents primarily rely on verbal input, which limits their ability to respond to emotional expressions. We therefore envision using the sensory equipment of today\'s devices to increase the nonverbal, empathic capabilities of chatbots. We initially validated that multi-modal LLMs (MLLM) can infer emotional expressions from facial expressions with high accuracy. In a user study (N=200), we then investigated the effects of such multi-modal input on response generation and perceived system empathy in emotional support scenarios. We found significant effects on cognitive and affective dimensions of linguistic expression in system responses, yet no significant increases in perceived empathy. Our research demonstrates the general potential of using nonverbal context to adapt LLM response behavior, providing input for future research on augmented interaction in empathic MLLM-based systems.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Schmidmaier, Matthias; Rupp, Jonathan; Mayer, Sven

Using a Secondary Channel to Display the Internal Empathic Resonance of LLM-Driven Agents for Mental Health Support Proceedings Article

In: Proceedings of the 27th International Conference on Multimodal Interaction, pp. 294–304, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 9798400714993.

@inproceedings{schmidmaier2025usingb,

title = {Using a Secondary Channel to Display the Internal Empathic Resonance of LLM-Driven Agents for Mental Health Support},

author = {Matthias Schmidmaier and Jonathan Rupp and Sven Mayer},

url = {https://doi.org/10.1145/3716553.3750759},

doi = {10.1145/3716553.3750759},

isbn = {9798400714993},

year = {2025},

date = {2025-01-01},

booktitle = {Proceedings of the 27th International Conference on Multimodal Interaction},

pages = {294\textendash304},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {ICMI '25},

abstract = {Conversational agents are becoming increasingly popular for digital mental health support. However, while empathy is essential for effective emotional support, the unimodal request-response interaction of such systems limits empathic communication. We address this limitation through a secondary channel that displays an agent’s inner reflections, similar to how nonverbal feedback in human interaction conveys cognitive and emotional states. We implemented a chatbot that generates not only conversational responses but also describes internal reasoning and emotional resonance. A user study involving N = 188 participants indicated a statistically significant increase in perceived empathy (+14.7%) when the agent’s internal reflections were displayed. Our findings demonstrate a practical method to enhance empathic interaction with LLM-based chatbots in empathy-critical contexts. Additionally, this work opens possibilities for multimodal systems where LLM-generated reflections may serve as input for generating nonverbal feedback.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

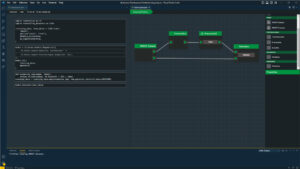

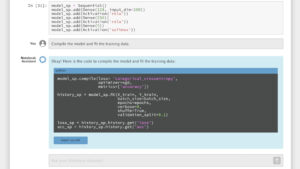

Weber, Thomas; Elagroudy, Passant; Palanque, Philippe; Mayer, Sven

Empowering the End-User – Software Development with LLMs Proceedings Article

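In: Joint Proceedings of the Workshops, Work in Progress Demos and Doctoral Consortium at the IS-EUD 2025 co-located with the 9th International Symposium on End-User Development, 2025.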

@inproceedings{weber2025empowering,

title = {Empowering the End-User \textendash Software Development with LLMs},

author = {Thomas Weber and Passant Elagroudy and Philippe Palanque and Sven Mayer},

url = {https://ceur-ws.org/Vol-3978/short-s5-00.pdf

https://www.hcilab.org/ai4eud-2025/},

year = {2025},

date = {2025-06-16},

urldate = {2025-06-16},

booktitle = {Joint Proceedings of the Workshops, Work in Progress Demos and Doctoral Consortium at the IS-EUD 2025 co-located with the 9th International Symposium on End-User Development},

series = {IS-EUD 2025},

abstract = {Software is essential across many domains, yet many users lack the ability to create or modify code, limiting their digital agency and creative potential. End-User Development (EUD) seeks to bridge this gap, but traditional approaches struggle to balance ease of use with the expressiveness of programming languages. Recent advances in Generative AI (GenAI), particularly Large Language Models, offer promising opportunities to simplify code creation with more natural interaction. This workshop brings together researchers and practitioners in EUD, AI-assisted development, and Human-Computer interaction to explore how we can utilize GenAI to give end-user developers tools that maintain the expressiveness of modern programming languages, are easy to use and approachable, and also allow end-users to create high-quality, reliable software without requiring extensive software engineering expertise. Through the contributions of the workshop participants and hands-on exploration of different paradigms and presentations, we will assess the status of knowledge and chart a path forward toward a widely accessible, natural way to create software. Ultimately, by broadening the people\'s capability for software development, we aim to increase digital literacy, agency, and participation.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Windl, Maximiliane; Laboda, Zsofia Petra; Mayer, Sven

Designing Effective Consent Mechanisms for Spontaneous Interactions in Augmented Reality Proceedings Article

In: Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, New York, NY, USA, 2025, ISBN: 979-8-4007-1394-1/25/04.

@inproceedings{windl2025designing,

title = {Designing Effective Consent Mechanisms for Spontaneous Interactions in Augmented Reality},

author = {Maximiliane Windl and Zsofia Petra Laboda and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2025/03/windl2025designing.pdf

https://osf.io/ukxw7/},

doi = {10.1145/3706598.3713519},

isbn = {979-8-4007-1394-1/25/04},

year = {2025},

date = {2025-04-26},

urldate = {2025-04-26},

booktitle = {Proceedings of the 2025 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI \'25},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

2024

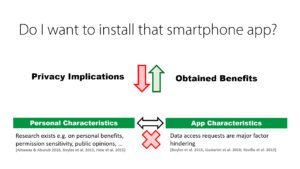

Bemmann, Florian; Mayer, Sven

The Impact of Data Privacy on Users' Smartphone App Adoption Decisions Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 8, no. MHCI, 2024.

@article{bemmann2024impact,

title = {The Impact of Data Privacy on Users\' Smartphone App Adoption Decisions},

author = {Florian Bemmann and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2024/11/bemmann2024impact.pdf},

doi = {10.1145/3676525},

year = {2024},

date = {2024-09-01},

urldate = {2024-09-01},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {8},

number = {MHCI},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {Rich user information is gained through user tracking and powers mobile smartphone applications. Apps thereby become aware of the user and their context, enabling intelligent and adaptive applications. However, such data poses severe privacy risks. Although users are only partially aware of them, awareness increases with the proliferation of privacy-enhancing technologies. How privacy literacy and rising privacy concerns affect app adoption is unclear; however, we hypothesize that they lead to a lower adoption rate of data-heavy smartphone apps, as non-usage often is the user\'s only option to protect themselves. We conducted a survey (N=100) to investigate the relationship between privacy-relevant app and publisher characteristics and the users\' intention to install and use the app. We found that users are especially critical of contentful data types and apps with rights to perform actions on their behalf. On the other hand, the expectation of a productive benefit induced by the app can increase the app-adoption intention. Our findings show which aspects designers of privacy-enhancing technologies should focus on to meet the demand for more user-centered privacy.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

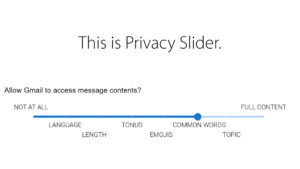

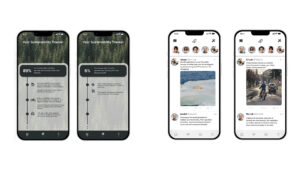

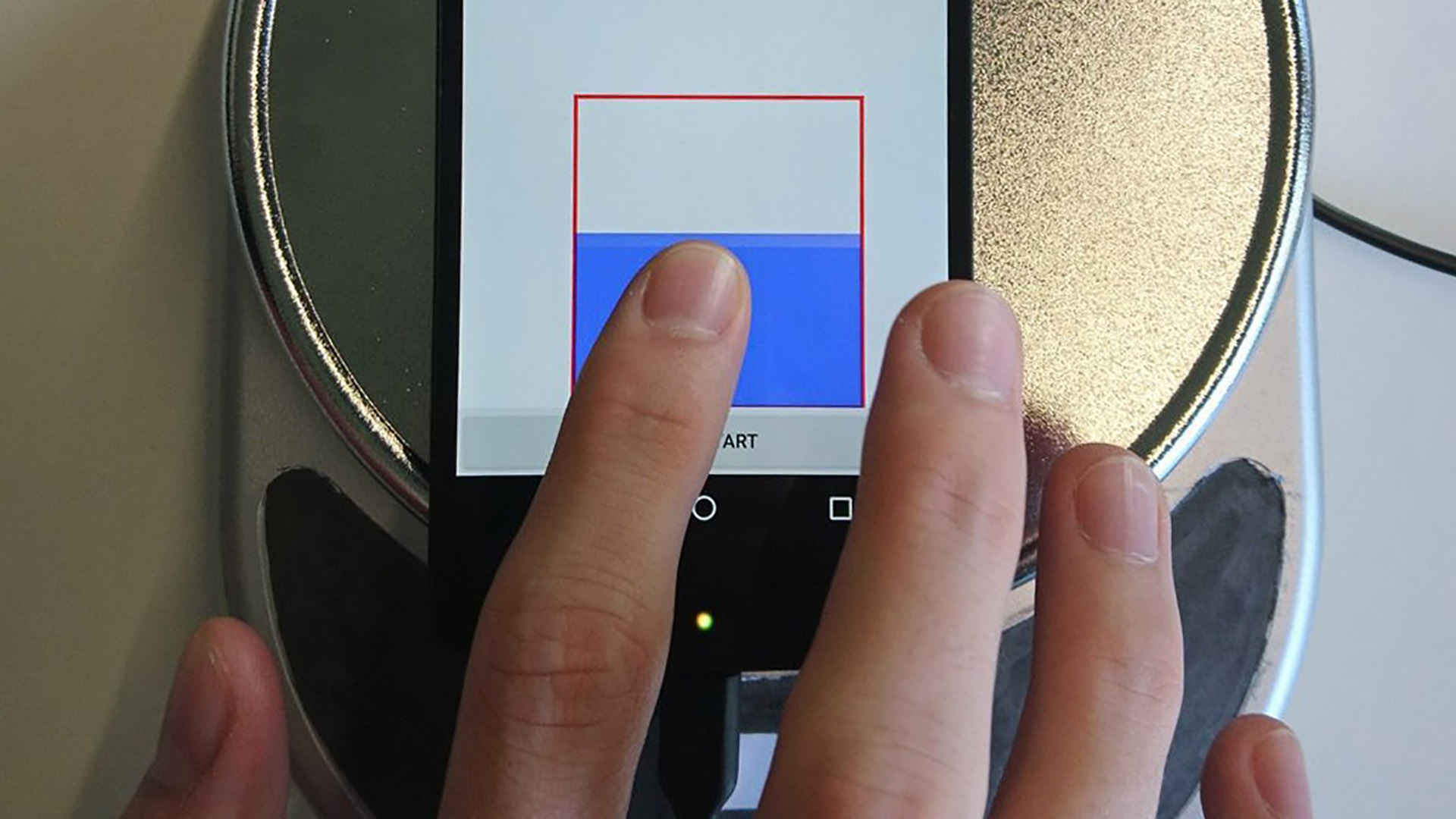

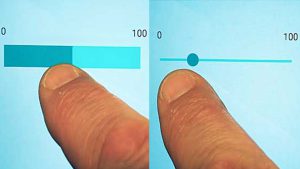

Bemmann, Florian; Stoll, Helena; Mayer, Sven

Privacy Slider: Fine-Grain Privacy Control for Smartphones Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 8, no. MHCI, 2024.

@article{bemmann2024privacy,

title = {Privacy Slider: Fine-Grain Privacy Control for Smartphones},

author = {Florian Bemmann and Helena Stoll and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2024/11/bemmann2024privacy.pdf},

doi = {10.1145/3676519},

year = {2024},

date = {2024-09-01},

urldate = {2024-09-01},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {8},

number = {MHCI},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {Today, users are constrained by binary choices when configuring permissions. These binary choices contrast with the complex data collected, limiting user control and transparency. For instance, weather applications do not need exact user locations when merely inquiring about local weather conditions. We envision sliders to empower users to fine-tune permissions. First, we ran two online surveys (N=123 \& N=109) and a workshop (N=5) to develop the initial design of Privacy Slider. After the implementation phase, we evaluated our functional prototype using a lab study (N=32). The results show that our slider design for permission control outperforms today\'s system concerning all measures, including control and transparency.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Chiossi, Francesco; Ou, Changkun; Mayer, Sven

Optimizing Visual Complexity for Physiologically-Adaptive VR Systems: Evaluating a Multimodal Dataset using EDA, ECG and EEG Features Best Paper Proceedings Article

In: International Conference on Advanced Visual Interfaces 2024, Association for Computing Machinery, New York, NY, USA, 2024.

@inproceedings{chiossi2023optimizing,

title = {Optimizing Visual Complexity for Physiologically-Adaptive VR Systems: Evaluating a Multimodal Dataset using EDA, ECG and EEG Features},

author = {Francesco Chiossi and Changkun Ou and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2024/04/chiossi2024optimizing.pdf

https://github.com/mimuc/avi24-adaptation-dataset},

doi = {10.1145/3656650.3656657},

year = {2024},

date = {2024-06-03},

urldate = {2024-06-03},

booktitle = {International Conference on Advanced Visual Interfaces 2024},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {AVI\'24},

abstract = {Physiologically-adaptive Virtual Reality systems dynamically adjust virtual content based on users’ physiological signals to enhance interaction and achieve specific goals. However, as different users’ cognitive states may underlie multivariate physiological patterns, adaptive systems necessitate a multimodal evaluation to investigate the relationship between input physiological features and target states for efficient user modeling. Here, we investigated a multimodal dataset (EEG, ECG, and EDA) while interacting with two different adaptive systems adjusting the environmental visual complexity based on EDA. Increased visual complexity led to increased alpha power and alpha-theta ratio, reflecting increased mental fatigue and workload. At the same time, EDA exhibited distinct dynamics with increased tonic and phasic components. Integrating multimodal physiological measures for adaptation evaluation enlarges our understanding of the impact of system adaptation on users’ physiology and allows us to account for it and improve adaptive system design and optimization algorithms.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Chiossi, Francesco; Stepanova, Ekaterina R; Tag, Benjamin; Perusquia-Hernandez, Monica; Kitson, Alexandra; Dey, Arindam; Mayer, Sven; Ali, Abdallah El

PhysioCHI: Towards Best Practices for Integrating Physiological Signals in HCI Proceedings Article

In: Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems, Association for Computing Machinery, New York, NY, USA, 2024.

@inproceedings{chiossi2023physiochi,

title = {PhysioCHI: Towards Best Practices for Integrating Physiological Signals in HCI},

author = { Francesco Chiossi and Ekaterina R Stepanova and Benjamin Tag and Monica Perusquia-Hernandez and Alexandra Kitson and Arindam Dey and Sven Mayer and Abdallah El Ali},

url = {https://sven-mayer.com/wp-content/uploads/2024/04/chiossi2024physiochi.pdf},

doi = {10.1145/3613905.3636286},

year = {2024},

date = {2024-05-11},

urldate = {2024-05-11},

booktitle = {Extended Abstracts of the 2024 CHI Conference on Human Factors in Computing Systems},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {CHI EA \'24},

abstract = {Recently, we saw a trend toward using physiological signals in interactive systems. These signals, offering deep insights into users\' internal states and health, herald a new era for HCI. However, as this is an interdisciplinary approach, many challenges arise for HCI researchers, such as merging diverse disciplines, from understanding physiological functions to design expertise. Also, isolated research endeavors limit the scope and reach of findings. This workshop aims to bridge these gaps, fostering cross-disciplinary discussions on usability, open science, and ethics tied to physiological data in HCI. In this workshop, we will discuss best practices for embedding physiological signals in interactive systems. Through collective efforts, we seek to craft a guiding document for best practices in physiological HCI research, ensuring that it remains grounded in shared principles and methodologies as the field advances.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

Chiossi, Francesco; Ou, Changkun; Putze, Felix; Mayer, Sven

Detecting Internal and External Attention in Virtual Reality: A Comparative Analysis of EEG Classification Methods Proceedings Article

In: Proceedings of the International Conference on Mobile and Ubiquitous Multimedia, pp. 381–395, Association for Computing Machinery, New York, NY, USA, 2024, ISBN: 9798400712838.

@inproceedings{chiossi2024detecting,

title = {Detecting Internal and External Attention in Virtual Reality: A Comparative Analysis of EEG Classification Methods},

author = {Francesco Chiossi and Changkun Ou and Felix Putze and Sven Mayer},

url = {https://doi.org/10.1145/3701571.3701579},

doi = {10.1145/3701571.3701579},

isbn = {9798400712838},

year = {2024},

date = {2024-01-01},

booktitle = {Proceedings of the International Conference on Mobile and Ubiquitous Multimedia},

pages = {381\textendash395},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

series = {MUM '24},

abstract = {Future VR environments envision adaptive and personalized interactions. To this aim, attention detection in VR settings would allow for diverse applications and improved usability. However, attention-aware VR systems based on EEG data suffer from long training periods, hindering generalizability and widespread adoption. This work addresses the challenge of person-independent, training-free VR BCI classifying internal and external attention in VR. We compared the performance of four classifiers on an EEG dataset (N=24) featuring internal and external attention labeled classes. With the goal of online adaptation, we tested overall accuracy, different window lengths of the data, and training split to optimize the trade-off between window length and classification accuracy. Our results show that models using a complete EEG band combination consistently achieve the highest accuracy, with Linear Discriminant Analysis particularly benefiting from full-band data. The window length impacts most models’ performance with short windows. LDA achieved optimal accuracy around 6.3 seconds, SVM and NN around 6.5 and 6 seconds, respectively, and RF reached stability at 6 seconds. Lastly, increasing training data ratios improved accuracy gains consistently across models. We discuss the potential of machine learning to model EEG correlates of internal and external attention as online inputs for adaptive VR systems.},

keywords = {},

pubstate = {published},

tppubtype = {inproceedings}

}

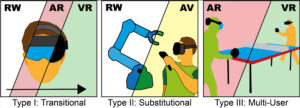

Chiossi, Francesco; Khaoudi, Yassmine El; Ou, Changkun; Sidenmark, Ludwig; Zaky, Abdelrahman; Feuchtner, Tiare; Mayer, Sven

Evaluating Typing Performance in Different Mixed Reality Manifestations using Physiological Features Journal Article

In: Proc. ACM Hum.-Comput. Interact., vol. 8, no. ISS, 2024.

@article{chiossi2024evaluating,

title = {Evaluating Typing Performance in Different Mixed Reality Manifestations using Physiological Features},

author = {Francesco Chiossi and Yassmine El Khaoudi and Changkun Ou and Ludwig Sidenmark and Abdelrahman Zaky and Tiare Feuchtner and Sven Mayer},

url = {https://osf.io/x7v9w},

doi = {10.1145/3698142},

year = {2024},

date = {2024-10-01},

urldate = {2024-10-01},

journal = {Proc. ACM Hum.-Comput. Interact.},

volume = {8},

number = {ISS},

publisher = {Association for Computing Machinery},

address = {New York, NY, USA},

abstract = {Mixed reality enables users to immerse themselves in high-workload interaction spaces like office work scenarios. We envision physiologically adaptive systems that can move users into different mixed reality manifestations, to improve their focus on the primary task. However, it is unclear which manifestation is most conducive for high productivity and engagement. In this work, we evaluate whether physiological indicators for engagement can be discriminated for different manifestations. For this, we engaged participants in a typing task in three different mixed reality manifestations (augmented reality, augmented virtuality, virtual reality) and monitored physiological correlates (EEG, ECG, and eye tracking) of users\' engagement and workload. We found that users achieved best typing performances in augmented reality and augmented virtuality. At the same time, physiological engagement peaked in augmented virtuality, while workload decreased. We conclude that augmented virtuality strikes a good balance between the different manifestations, as it facilitates displaying the physical keyboard for improved typing performance and, at the same time, allows one to block out the real world, removing many real-world distractors.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

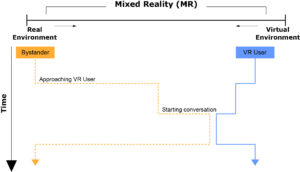

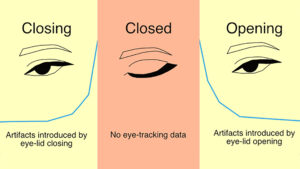

Chiossi, Francesco; Trautmannsheimer, Ines; Ou, Changkun; Gruenefeld, Uwe; Mayer, Sven

Searching Across Realities: Investigating ERPs and Eye-Tracking Correlates of Visual Search in Mixed Reality Journal Article

In: IEEE Transactions on Visualization and Computer Graphics, 2024.

@article{chiossi2024searching,

title = {Searching Across Realities: Investigating ERPs and Eye-Tracking Correlates of Visual Search in Mixed Reality},

author = {Francesco Chiossi and Ines Trautmannsheimer and Changkun Ou and Uwe Gruenefeld and Sven Mayer},

url = {https://sven-mayer.com/wp-content/uploads/2024/08/chiossi2024searching.pdf

https://osf.io/zdpty/},

doi = {10.1109/TVCG.2024.3456172},

year = {2024},

date = {2024-10-21},

urldate = {2024-10-21},

journal = {IEEE Transactions on Visualization and Computer Graphics},

abstract = {Mixed Reality allows us to integrate virtual and physical content into users\' environments seamlessly. Yet, how this fusion affects perceptual and cognitive resources and our ability to find virtual or physical objects remains uncertain. Displaying virtual and physical information simultaneously might lead to divided attention and increased visual complexity, impacting users\' visual processing, performance, and workload. In a visual search task, we asked participants to locate virtual and physical objects in Augmented Reality and Augmented Virtuality to understand the effects on performance. We evaluated search efficiency and attention allocation for virtual and physical objects using event-related potentials, fixation and saccade metrics, and behavioral measures. We found that users were more efficient in identifying objects in Augmented Virtuality, while virtual objects gained saliency in Augmented Virtuality. This suggests that visual fidelity might increase the perceptual load of the scene. Reduced amplitude in distractor positivity ERP, and fixation patterns supported improved distractor suppression and search efficiency in Augmented Virtuality. We discuss design implications for mixed reality adaptive systems based on physiological inputs for interaction.},

keywords = {},

pubstate = {published},

tppubtype = {article}

}

Chiossi, Francesco; Gruenefeld, Uwe; Hou, Baosheng James; Newn, Joshua; Ou, Changkun; Liao, Rulu; Welsch, Robin; Mayer, Sven