Today’s voice assistants lack fine-grained contextual awareness, requiring users to be unambiguous in their voice commands. In a smart home setting, one cannot simply say “turn that up” without providing extra context, even when the object of use would be obvious to humans in the room (for example, when watching TV, cooking on a stove, listening to music on a sound system). This problem is particularly bad in mobile voice interactions, where users are on the go, and the physical context is constantly changing. Even with GPS, mobile voice agents cannot resolve questions like “when does this close?” or “what is the rating of this restaurant?”

Users are often directly looking at the objects they are inquiring about. This real-world gaze location is an obvious source of contextual information that could both resolve ambiguities in spoken commands and enable more rapid and human-like interactions. Unfortunately, prior gaze-augmented voice agents have required environments to be pre-registered or otherwise constrained, and most often employ head-worn sensors to capture gaze – clearly impractical for consumer use and popular form factors such as smartphones.

We have developed a breakthrough new approach for sensing real-world gaze location for use with mobile voice agents. Critically, our implementation is software only, requiring no new hardware or modification of the environment. It works in both indoor scenes as well as outdoor streetscapes while walking. To achieve this, we simultaneously open the front and rear cameras of a smartphone, offering a combined field of view of just over 200 degrees on the latest generation of smartphones. The front-facing camera is used to track the head in 3D, including its direction vector. As the geometry of the front and back cameras is fixed and known, we can cast the head vector into the 3D world scene as captured by the rear-facing camera.

Our software allows a user to intuitively define an object or region of interest using their head gaze. Voice assistants can then use this extra contextual information to make inquiries that are more precise and natural.

In addition to streetscape questions, such as “is this restaurant good?”, WorldGaze can also facilitate rapid interactions in density instrumented smart environments, including automatically resolving otherwise ambiguous actions, such as “go,” “play,” and “stop.” We also believe WorldGaze could help to socialize mobile AR experiences, currently typified by people walking down the street looking down at their devices. WorldGaze could also help people better engage with the world and the people around them, while still offering powerful digital interactions through voice.

Streetscapes

It is not uncommon to see people walking down the street looking at their smartphones. With sufficiently wide-angle lenses, WorldKit could allow for natural, rapid, and targeted voice inquiries. For example, a user could look at a store front and ask, “when does this open?” WorldGaze fills in the ambiguous “this” with the target business, allowing the voice agent to reply intelligently. Similarly, a user could ask “what is the rating for this place” or even “make me a reservation for 2 at 7pm”.

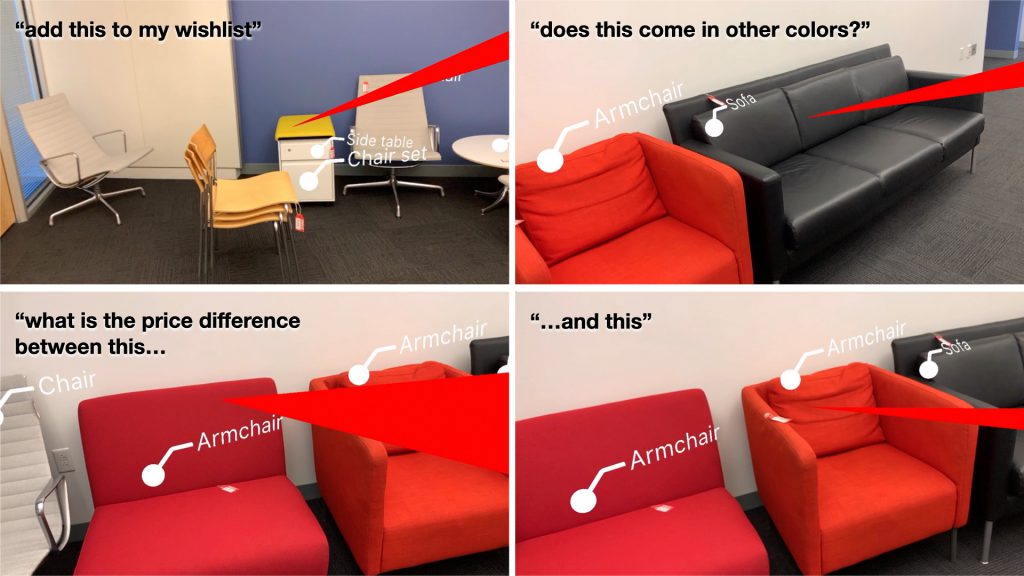

Retail

Retail settings are also ripe for augmentation, as they are full of a great variety of objects that customers might wish to know more information about. For example, a customer could ask, “does this come in any other colors?” in regard to a sofa they are evaluating. Likewise, they could also say “add this to my wishlist”. It would also be trivial to extend WorldGaze to handle multiple sequential targets, allowing for comments such as “what is the price difference between this… and this.”

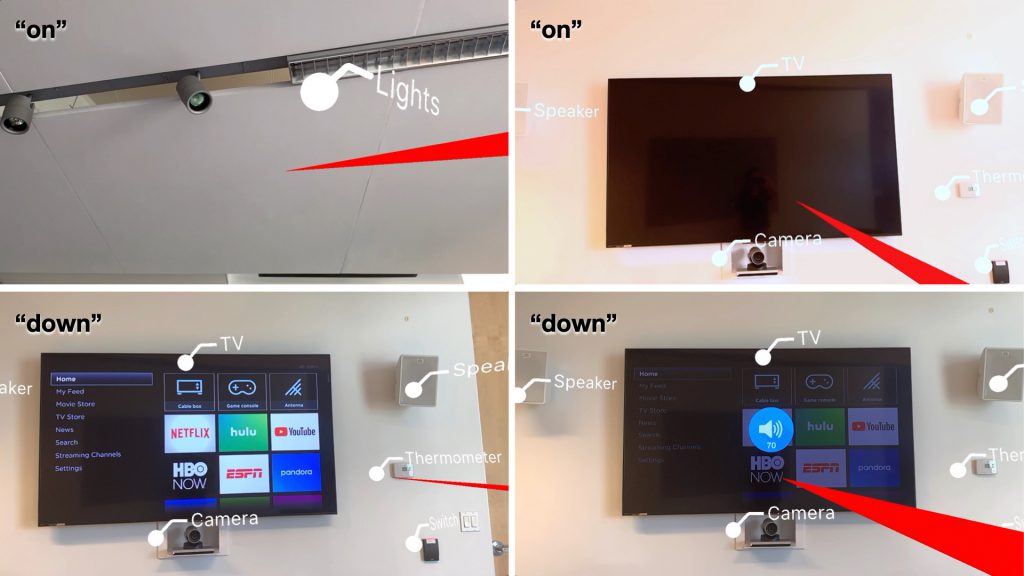

Smart Homes and Offices

WorldGaze could also facilitate rapid interactions in density instrumented smart environments, automatically resolving otherwise ambiguous verbs, such as play, go, and stop. For example, a user could say “on” to lights or a TV, or “down” to a TV or thermostat. WorldGaze offers the necessary context to resolve these ambiguities and trigger the right command.

Further Information: full-paper PDF, YouTube

Press Coverage: vision-systems.com

Comments